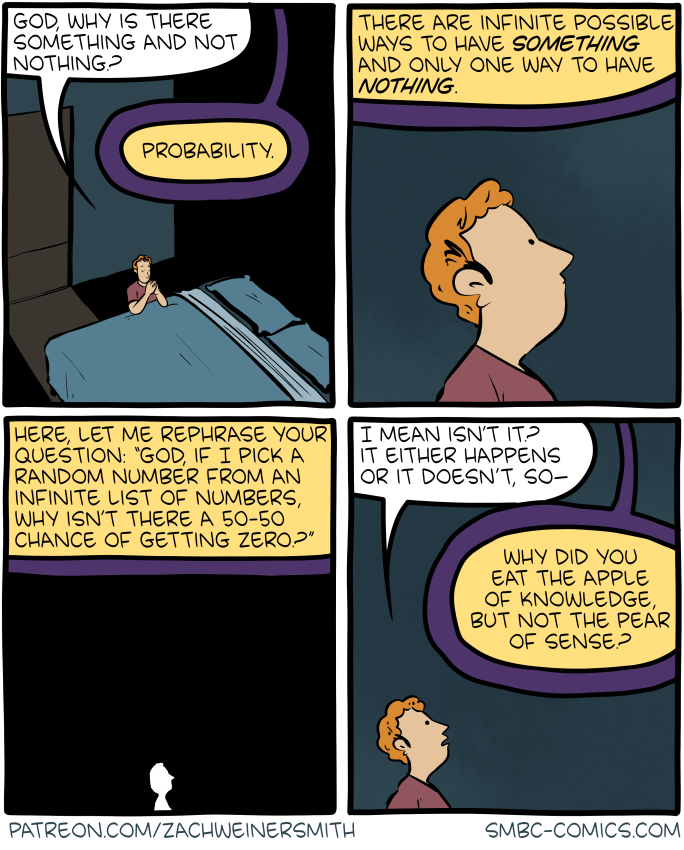

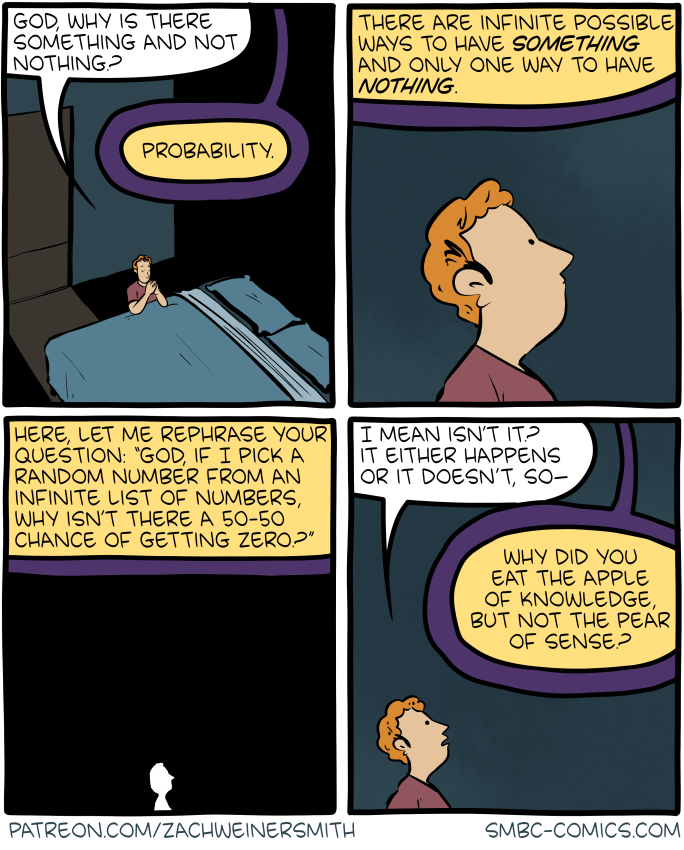

Hovertext:

The avocado of not being such a dick would've been nice as well.

Prescribed fires, or the lack of them, are on everybody's lips these days. Most people are just parroting talking points for political purposes, but perhaps you'd actually like to be a little smarter about it? Sure you would. It's a weekend and you have some spare time.

So let's go through some history and then some current events. It's a little long but worth it.

For most of modern US history, deliberately setting fires was considered folly and public policy preached exactly the opposite. This wasn't due to ignorance; it was due to what people saw with their own eyes. Here's the history of intense wildfires in the western US:

During the long era of occupation by native Indian tribes, who had adopted a cultural practice of using fire to manage the land, big wildfires mostly followed the climate. During periods of drought or warmth, fire activity went up. During periods of rain and cool temps, it went down.

When American settlers came to the West in the 19th century, they happened to arrive at a time when wildfire outbreaks were rising steeply. They concluded, not unreasonably, that the indigenous practice of setting controlled fires was ineffective at best and probably actively harmful. So they replaced it with a strategy of fire suppression, i.e., the practice of putting out wildfires immediately. It began as a deliberate policy in New York in 1885, and the Santiago fire in 1889 led California to follow suit. In 1910 the "Big Blowup," a massive fire in Montana that burned 3 million acres in two days, caused the US Forest Service to make it official policy for the entire country:

> The 1910 fires had a profound effect on national fire policy. Local and national Forest Service administrators emerged from the incident convinced that the devastation could have been prevented if only they had had enough men and equipment on hand. They also convinced themselves, and members of Congress and the public, that only total fire suppression could prevent such an event from occurring again.

It worked: as the timeline shows, fire activity dropped to a near-record low in the 20th century. In 1935 the Forest Service adopted the "10 am rule," a mandate to control every fire by 10 am on the morning after it was first reported. This continued through 1978, when the Forest Service started to allow some natural lightning fires to burn. But it still didn't allow prescribed burns as an active strategy.

And why would they? It was just bad luck that the history of white settlement in the West coincided with a big increase in fires, but nobody knew that. Likewise, the fire suppression strategy was sowing the seeds of its own destruction by letting fuel pile up, but no one knew that either. All the evidence seemed to suggest that fire suppression worked.

What's more, even when change did come, it came haltingly because loosening the rules had downsides as well as upsides. In 1988 the National Park Service allowed several lightning fires to burn in Yellowstone, eventually causing a conflagration that consumed over a million acres. Public fury was intense. In a post-mortem after the fire:

> The team reaffirmed the fundamental importance of fire’s natural role but recommended that fire management plans be strengthened.... Until new fire management plans were prepared, the Secretaries suspended all prescribed natural fire programs in parks and wilderness areas.

Natural burns returned the next year, and in 1994 the South Canyon fire, which killed 14 firefighters, led to the first comprehensive review and update of federal wildland fire policy in decades. This was change at a snail's pace. But by the late '90s, as forest managers finally started to understand the danger of long-term fuel buildup in an era of drought and climate change, they began to use deliberate controlled burns as an active strategy for limiting the damage of forest fires. Once again, though, there were downsides:

> A prescribed fire set by fire managers on the Bandelier National Monument in 2000 was declared a wildfire and escaped onto the adjacent Santa Fe National Forest. The fire burned into the Los Alamos National Laboratory and the town of Los Alamos. Over 48,000 acres were burned and 255 homes destroyed before it was extinguished. Public outcry was immediate and the National Park Service held an investigation that placed blame on improper implementation of the prescribed burn and on inadequate contingency resources to successfully suppress the fire.

In fact, the burn was most likely done correctly but fell victim to sudden winds that hadn't been in the weather forecasts. A review reaffirmed policy and led to gradually increased use of prescribed fires over the next few years. Nationwide, prescribed burns have increased more than 150% since 1998:

It's worth noting that the vast majority of these burns take place in the South, where they've been a way of life for some time. The rest of the country combined, including the West, is responsible for only about 20% of controlled burns.

There are a few things to take away from all this. First, the longstanding opposition to prescribed burns has never been about California. It's nationwide and was due primarily to US Forest Service policies that set a standard for the whole country.

Second, prescribed burns have both upsides and downsides. The upside is that they help prevent wildfires from spreading, especially to populated areas. The downside is that sometimes they get out of control and produce understandable public opposition. They also generate large amounts of smoke, which is both annoying and a genuine health hazard.

Third, prescribed burns are expensive and underfunded. In 2022, the Biden administration announced a plan to reduce the fire risk on 50 million acres of land, an effort it figured would cost around $50 billion. But it was only partially funded with $3 billion from the 2021 infrastructure act.

Finally, prescribed burns can only be done on certain days: not too hot and not too cold (or rainy). Forest managers declare specific days, mostly in spring and fall, as "burn days," but climate change has steadily eroded that window. Fewer and fewer days, especially in the West, are safe for prescribed burns.

This is a particular problem for California, which is naturally hot, frequently windy, and has lousy air quality to begin with. This means that the window for controlled burns—not too hot, not too cold, not too windy, air quality not too bad—is especially short. Partly as a result of this, prescribed burns in California have increased recently, but not as much as in the rest of the country:

Another thing that's a particular problem for Southern California is that most of the chaparral and brush is in hilly and mountainous areas:

> Managing the grasses, bushes and shrubs on these hillsides is “physically impossible,” [Zeke] Lunder said. “You would have to send someone down on rope with a chainsaw like an African honey collector to cut the brush.”

And even if you do manage to clear out the vegetation—either with fire or chainsaws—it will grow back quickly because these are native plants adapted to the climate. And in the meantime you've turned the hillsides into massive mudslide machines the next time it rains. This is not a great tradeoff.

So California has been late to the prescribed burn revolution, but it's recently gotten on the bandwagon. In 2018 Gov. Jerry Brown set a goal of burning 500,000 acres per year by 2023—though this was cut back a couple of years later by Gov. Gavin Newsom to 400,000 acres by 2025. In 2021 Newsom signed a bill that protected private landowners who want to do prescribed burns, and later in the year approved $1.5 billion for wildfire management. In 2023 California Democrats urged the EPA not to restrict burns via new environmental rules. Later in the year Newsom signed a law that ramped up the effort to meet the 2025 goal. But it's a tough goal to reach largely because of resource constraints. There just aren't enough trained burn bosses to meet demand:

> Federal and state fire agencies alone can’t meet the growing need for prescribed fire. Local burn associations, Native American tribes, fire safe councils and programs like Firewise USA (a guide for residents on how to reduce wildfire risks in their own neighborhoods) can help bridge the gap. In California, between 10 million and 30 million acres could benefit from restoration thinning or prescribed fire.

So is that it? By no means. There's also regulation, and prescribed burns can take a while to clear federal NEPA permitting:

There are three levels of NEPA permitting, and the vast majority of prescribed burn plans qualify for light NEPA treatment. For these, permitting takes between zero and 20 months (blue bars). But that's not everything. Forest managers don't just wake up in the morning and decide to light a match in a likely looking bit of forest. As you can see in the chart (orange bars), there's a ton of planning that goes into these projects, and the planning takes longer than the permitting. Approximately the same thing is true of "mechanical treatment," an alternative (and costlier) way of reducing fuel load by cutting back trees and clearing underbrush.

And note that "permitting" is just the process of writing a plan. Contrary to popular opinion, lawsuits aren't a big part of it. Environmental groups do frequently sue over forest management projects, but only one or two a year are related to burns or mechanical treatments—and almost all of them lose. It's not really a thing in the big picture.

In California, CEQA adds to the delay in project approvals, but the biggest regulatory hurdle comes from air quality managers who restrict the number of burn days available. This is a bone of contention, however, and the air quality folks say that burn bosses don't even use all the days available to them.

Bottom line: regulatory hurdles are real for both prescribed burns and mechanical treatments, but they aren't the biggest obstacles by any means. The biggest impediments are public opposition, rising insurance costs, resource constraints, and the risk-averse views of forest managers, many of whom are still wary of prescribed burns. This is partly for technical reasons and partly out of fear. Only one out of a thousand prescribed burns gets out of control, but that's enough. Here is Michael Wara, a lawyer and the director of Stanford’s Climate and Energy Policy Program:

> “If there’s a prescribed fire and it goes wrong, someone’s in trouble,” Wara said. In this case, it’s the federal government, which is on the hook for billions in recovery costs.
>
> “But no one in the Forest Service gets in trouble when there’s a wildfire that destroys communities and destroys a landscape, and the fire really occurs because of the lack of management over decades,” Wara said.

The end result of these obstacles is that fewer acres are burned than burners would like:

However, California legislators have introduced a slow but steady stream of legislation to deregulate burns. The chart above (on right) is dated but provides an idea of how much legislative activity there is and how it goes up and down depending on current wildfire activity.

So that's about it. The main takeaway here is that to understand why prescribed burns are still underutilized you need to take a longer view. For example, it's worth knowing that wildfires began to increase dramatically only in the late '90s:

It's human nature that it takes time to react to something like this. Maybe the surge in the early 2000s was temporary? We'll see. Can it really be reined in with prescribed burns? We should investigate. It wasn't until around 2010 when it became clear that massive wildfire growth was here to stay and that both burning and brush clearing were necessary parts of fire management. And it took even longer for opposition to slowly erode and regulators to become more sympathetic. Those things have happened, and we're now making progress.

But it's slow. Only in the last few years has it become conventional wisdom that prescribed burns and mechanical treatments are obviously good things, so why the hell aren't we doing more of them? The answer comes only if you know some of the history behind the reluctance to change forest management practices. And keep in mind that the new conventional wisdom still comes with billions of dollars of cost and dangers of its own.

In other words, if you learned about prescribed burns and forest management just in the past few days—or even the past few years—rein in your outrage. You weren't there for the decades of conversation about it that led so tortuously to the current enlightened state you take for granted.

Henry George Liddell and Robert Scott’s Intermediate Greek-English Lexicon of 1851 contains a sobering entry:

> ραφανιδοω: to thrust a radish up the fundament, a punishment of adulterers in Athens

In recalling this to friends at Christmas in 1972, historian John Julius Norwich wrote, “I’m sure it must once have been familiar to every schoolboy, and now that the classics are less popular than they used to be I should hate it to be forgotten.”

Editors of academic journals have been reporting that they find it increasingly hard to secure referees for papers submitted to their journals. When discussing this issue with colleagues over the years, I’ve heard a few remarks that made me wonder what considerations we use to decide whether to accept a review request. Clearly, there must be a fit in content: if one thinks the paper is outside one’s area of expertise, one should not accept the referee request. But I have also heard considerations such as “I decline because I have already refereed for this journal before”, or “I referee as many papers as I receive reports”, or “I referee 5 papers a year”. Are these valid reasons to decline?

Clearly, the answer cannot be that how much we choose to referee is purely a private affair. All academics would benefit if there were no shortage of referees, hence it cannot be a purely private affair. Yet the referee shortage has the structure of a collective action problem. And we know that there are two principal ways to address collective action problems: either by having a collective decision maker (such as the government), which is not a solution available for this problem, or by establishing a social norm.

Solving the referee crisis in academic peer review will require multiple measures, but when it comes to ensuring that enough people are willing to referee, I propose that we discuss the number we should treat as the lower bound of how much we referee. Let’s call the number of reports a person writes for journals, divided by the number of reports that person receives on their own paper submissions, that person’s referee ratio. I want to defend the claim that the referee ratio should be at least 1.2. In other words, for every 5 reports we receive, we should write at least 6 (adjusted for the number of authors of a paper).

Why is that number not 1, as some seem to think? The first reason is that a number of reports are written for authors who will not be able to (fully) reciprocate. Think of PhD students who submit their work but are too junior to review themselves. (They may become sufficiently experienced towards the end of their PhD trajectory, but I think it’s a reasonable assumption that some people who submit to journals do not (yet) have the skills and expertise to serve as referees.) Some of them will submit for a few years and then stop doing academic research, at which point they are no longer part of the peer-review system.

The second reason is that there might be authors who temporarily should not be expected to reciprocate. I am thinking in particular of journal editors, and associate editors with significant workloads, who are doing crucial work in making the system run in the first place. But if we agree on this, then it might well be the case that the minimal referee ratio should be 1.5 rather than 1.2; I am not sure.

The third reason is that the system needs some buffer in order to function. We need some oil to make the machine run smoothly. If everyone were to adopt as a social norm that we should aim for a referee ratio of at least 1.2, then more scholars who receive a referee request would accept it, because the social norm tells them they should.

It is actually pretty easy to calculate our own referee ratio. Many people keep track of the papers they have refereed, often as part of their annual assessment conversations with their line managers. And it’s also quite easy to keep track of (or reconstruct for the past) the number of reports we have received. It might take us a few hours, but the potential objection that this is too burdensome isn’t very strong, I’d think.
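Because the referee ratio is just a quotient, the calculation can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a procedure from the text: the `Submission` record, the `referee_ratio` function, and the rule that a report on an n-author paper counts as 1/n of a received report are all hypothetical choices, since the text says only that the count should be "adjusted for the number of authors of a paper".

```python
from dataclasses import dataclass
from fractions import Fraction

# The 1.2 minimum proposed in the text, kept exact as 6/5.
MIN_REFEREE_RATIO = Fraction(6, 5)


@dataclass
class Submission:
    """One of your own paper submissions (hypothetical record format)."""
    reports_received: int  # referee reports sent back to the authors
    num_authors: int       # all authors on the paper, including you


def referee_ratio(reports_written: int, submissions: list[Submission]) -> Fraction:
    """Reports written divided by author-adjusted reports received.

    Assumption (not from the text): a report on an n-author paper
    counts as 1/n of a report received by each author.
    """
    received = sum(
        (Fraction(s.reports_received, s.num_authors) for s in submissions),
        start=Fraction(0),
    )
    if received == 0:
        raise ValueError("no reports received yet, so the ratio is undefined")
    return Fraction(reports_written) / received


if __name__ == "__main__":
    history = [
        Submission(reports_received=3, num_authors=1),  # counts as 3
        Submission(reports_received=2, num_authors=2),  # counts as 1
        Submission(reports_received=4, num_authors=4),  # counts as 1
    ]
    ratio = referee_ratio(reports_written=6, submissions=history)
    print(float(ratio))                # 1.2
    print(ratio >= MIN_REFEREE_RATIO)  # True
```

Exact fractions are used instead of floats so that a ratio of exactly 6/5 compares equal to the 1.2 threshold rather than possibly falling just short through rounding. Trying a stricter norm such as 1.5 only requires changing `MIN_REFEREE_RATIO`.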

My hope is that agreeing on a minimal referee ratio would help address the referee shortage problem. But it would also address the issue that some people are, qua character, much more prone to feel guilty if they decline a referee request. I am sadly in that camp (I generally blame it on having been raised in a Catholic culture). It has led to much agonizing, and contributed to an excessive workload, which has negatively affected my health. Once, when I was close to burn-out, I had a coach who told me to protect myself by quantifying upper limits to my professional commitments, because otherwise they would crush me. In short, people who are insufficiently able to say ‘no’ might be helped if they make the calculation and see that their referee ratio is not around 1 but rather way over 2.

There are two alternatives that I can think of. One is to pay referees. But there are at least three reasons against this. First, it would increase the bureaucracy and paperwork involved in refereeing. Second, it would be quite unfair against a background of huge inequalities in financial resources, especially on a global scale, but even within continents and countries. Third, it would commodify another aspect of academia. Is this something we should want?

There are other strategies that journals can use that are complementary. Some journals now state that one can only submit if one is also willing to review for that journal; to me that seems absolutely reasonable. But if that were our only expression of reciprocity duties, it would be too strict. For example, given my theoretical/conceptual expertise on the capability approach, I’ve reviewed papers that used that framework for a number of journals from other disciplines (such as Social Science & Medicine). So I think we should, to some extent, also be willing to review for journals that we will most likely never submit to, because it helps colleagues in other disciplines.

It might be that the number of 1.2 is not the right one. Perhaps it should be 1.5, or even 2. But the prior question is whether we agree there should be such a number that functions as a professional social norm. Or is there a better way to solve the referee crisis?